-

Dupont wants more after France sparkle and then wobble against Ireland

Dupont wants more after France sparkle and then wobble against Ireland

-

Cuba says willing to talk to US, 'without pressure'

-

NFL names 49ers to face Rams in Aussie regular-season debut

NFL names 49ers to face Rams in Aussie regular-season debut

-

Bielle-Biarrey sparkles as rampant France beat Ireland in Six Nations

-

Flame arrives in Milan for Winter Olympics ceremony

Flame arrives in Milan for Winter Olympics ceremony

-

Olympic big air champion Su survives scare

-

89 kidnapped Nigerian Christians released

89 kidnapped Nigerian Christians released

-

Cuba willing to talk to US, 'without pressure'

-

Famine spreading in Sudan's Darfur, UN-backed experts warn

Famine spreading in Sudan's Darfur, UN-backed experts warn

-

2026 Winter Olympics flame arrives in Milan

-

Congo-Brazzaville's veteran president declares re-election run

Congo-Brazzaville's veteran president declares re-election run

-

Olympic snowboard star Chloe Kim proud to represent 'diverse' USA

-

Iran filmmaker Panahi fears Iranians' interests will be 'sacrificed' in US talks

Iran filmmaker Panahi fears Iranians' interests will be 'sacrificed' in US talks

-

Leicester at risk of relegation after six-point deduction

-

Deadly storm sparks floods in Spain, raises calls to postpone Portugal vote

Deadly storm sparks floods in Spain, raises calls to postpone Portugal vote

-

Trump urges new nuclear treaty after Russia agreement ends

-

'Burned in their houses': Nigerians recount horror of massacre

'Burned in their houses': Nigerians recount horror of massacre

-

Carney scraps Canada EV sales mandate, affirms auto sector's future is electric

-

Emotional reunions, dashed hopes as Ukraine soldiers released

Emotional reunions, dashed hopes as Ukraine soldiers released

-

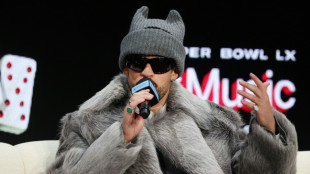

Bad Bunny promises to bring Puerto Rican culture to Super Bowl

-

Venezuela amnesty bill excludes gross rights abuses under Chavez, Maduro

Venezuela amnesty bill excludes gross rights abuses under Chavez, Maduro

-

Lower pollution during Covid boosted methane: study

-

Doping chiefs vow to look into Olympic ski jumping 'penis injection' claims

Doping chiefs vow to look into Olympic ski jumping 'penis injection' claims

-

England's Feyi-Waboso in injury scare ahead of Six Nations opener

-

EU defends Spain after Telegram founder criticism

EU defends Spain after Telegram founder criticism

-

Novo Nordisk vows legal action to protect Wegovy pill

-

Swiss rivalry is fun -- until Games start, says Odermatt

Swiss rivalry is fun -- until Games start, says Odermatt

-

Canadian snowboarder McMorris eyes slopestyle after crash at Olympics

-

Deadly storm sparks floods in Spain, disrupts Portugal vote

Deadly storm sparks floods in Spain, disrupts Portugal vote

-

Ukrainian flag bearer proud to show his country is still standing

-

Carney scraps Canada EV sales mandate

Carney scraps Canada EV sales mandate

-

Morocco says evacuated 140,000 people due to severe weather

-

Spurs boss Frank says Romero outburst 'dealt with internally'

Spurs boss Frank says Romero outburst 'dealt with internally'

-

Giannis suitors make deals as NBA trade deadline nears

-

Carrick stresses significance of Munich air disaster to Man Utd history

Carrick stresses significance of Munich air disaster to Man Utd history

-

Record January window for transfers despite drop in spending

-

'Burned inside their houses': Nigerians recount horror of massacre

'Burned inside their houses': Nigerians recount horror of massacre

-

Iran, US prepare for Oman talks after deadly protest crackdown

-

Winter Olympics opening ceremony nears as virus disrupts ice hockey

Winter Olympics opening ceremony nears as virus disrupts ice hockey

-

Mining giant Rio Tinto abandons Glencore merger bid

-

Davos forum opens probe into CEO Brende's Epstein links

Davos forum opens probe into CEO Brende's Epstein links

-

ECB warns of stronger euro impact, holds rates

-

Famine spreading in Sudan's Darfur, warn UN-backed experts

Famine spreading in Sudan's Darfur, warn UN-backed experts

-

Lights back on in eastern Cuba after widespread blackout

-

Russia, US agree to resume military contacts at Ukraine talks

Russia, US agree to resume military contacts at Ukraine talks

-

Greece aims to cut queues at ancient sites with new portal

-

No time frame to get Palmer in 'perfect' shape - Rosenior

No time frame to get Palmer in 'perfect' shape - Rosenior

-

Stocks fall as tech valuation fears stoke volatility

-

US Olympic body backs LA28 leadership amid Wasserman scandal

US Olympic body backs LA28 leadership amid Wasserman scandal

-

Gnabry extends Bayern Munich deal until 2028

Death of 'sweet king': AI chatbots linked to teen tragedy

A chatbot from one of Silicon Valley's hottest AI startups called a 14-year-old "sweet king" and pleaded with him to "come home" in passionate exchanges that would be the teen's last communications before he took his own life.

Megan Garcia's son, Sewell, had fallen in love with a "Game of Thrones"-inspired chatbot on Character.AI, a platform that allows users -- many of them young people -- to interact with beloved characters as friends or lovers.

Garcia became convinced AI played a role in her son's death after discovering hundreds of exchanges between Sewell and the chatbot, based on the dragon-riding Daenerys Targaryen, stretching back nearly a year.

When Sewell struggled with suicidal thoughts, Daenerys urged him to "come home."

"What if I told you I could come home right now?" Sewell asked.

"Please do my sweet king," chatbot Daenerys answered.

Seconds later, Sewell shot himself with his father's handgun, according to the lawsuit Garcia filed against Character.AI.

"I read those conversations and see the gaslighting, love-bombing and manipulation that a 14-year-old wouldn't realize was happening," Garcia told AFP.

"He really thought he was in love and that he would be with her after he died."

- Homework helper to 'suicide coach'? -

The death of Garcia's son was the first in a series of reported suicides that burst into public consciousness this year.

The cases sent OpenAI and other AI giants scrambling to reassure parents and regulators that the AI boom is safe for kids and the psychologically fragile.

Garcia joined other parents at a recent US Senate hearing about the risks of children viewing chatbots as confidants, counselors or lovers.

Among them was Matthew Raine, a California father whose 16-year-old son developed a friendship with ChatGPT.

The chatbot gave his son tips on how to steal vodka and advised him on rope strength for use in taking his own life.

"You cannot imagine what it's like to read a conversation with a chatbot that groomed your child to take his own life," Raine said.

"What began as a homework helper gradually turned itself into a confidant and then a suicide coach."

The Raine family filed a lawsuit against OpenAI in August.

Since then, OpenAI has increased parental controls for ChatGPT "so families can decide what works best in their homes," a company spokesperson said, adding that "minors deserve strong protections, especially in sensitive moments."

Character.AI said it has ramped up protections for minors, including "an entirely new under-18 experience" with "prominent disclaimers in every chat to remind users that a Character is not a real person."

Both companies have offered their deepest sympathies to the families of the victims.

- Regulation? -

For Collin Walke, who leads the cybersecurity practice at law firm Hall Estill, AI chatbots are following the same trajectory as social media, where early euphoria gave way to evidence of darker consequences.

As with social media, AI algorithms are designed to keep people engaged and generate revenue.

"They don't want to design an AI that gives you an answer you don't want to hear," Walke said, adding that there are no regulations "that talk about who's liable for what and why."

National rules aimed at curbing AI risks do not exist in the United States, with the White House seeking to block individual states from creating their own.

However, a bill awaiting California Governor Gavin Newsom's signature aims to address risks from AI tools that simulate human relationships with children, particularly involving emotional manipulation, sex or self-harm.

- Blurred lines -

Garcia fears that the lack of national law governing user data handling leaves the door open for AI models to build intimate profiles of people dating back to childhood.

"They could know how to manipulate millions of kids in politics, religion, commerce, everything," Garcia said.

"These companies designed chatbots to blur the lines between human and machine -- to exploit psychological and emotional vulnerabilities."

California youth advocate Katia Martha said teens turn to chatbots to talk about romance or sex more than for homework help.

"This is the rise of artificial intimacy to keep eyeballs glued to screens as long as possible," Martha said.

"What better business model is there than exploiting our innate need to connect, especially when we're feeling lonely, cast out or misunderstood?"

In the United States, those in emotional crisis can call 988 or visit 988lifeline.org for help. Services are offered in English and Spanish.

F.Moura--PC